Database for JRC CbM

DB in the CbM architecture

Sentinel images offer the possibility to monitor in real time and on a continuous basis the conditions of the agricultural parcels and their coherence with the declarations made by the farmers within the Common Agricultural Policy (CAP). At the same time, this huge amount of data poses technical challenges for the extraction and handling of the information (signatures) that is relevant for the Checks by Monitoring (CbM). In this respect the database (DB) plays a central role in the demonstrative JRC CbM architecture, as it is the tool used to store and manage the data involved in the process (except satellite images).

Satellite data are made available in the Object Storage of the Copernicus Data and Information Access Services (DIAS) infrastructure and processed in that environment by Python-based modules. The base layers (particularly, parcels and image metadata, the latter generated by the DIAS) are stored in a database installed in the Member State (MS) DIAS space, inside the same environment of the satellite image archive. The database also stores the signatures (i.e. number of pixels, mean, std, min, max, 25th percentile, 50th percentile, 75th percentile) that result form the intersection of satellite image bands and the parcels. This information can then be used by analysts to verify the consistency of the farmer declarations against the conditions detected by the satellites.

In the JRC CbM system, the open-source Relational DataBase Management System (RDBMS) PostgreSQL with its spatial extension PostGIS are used as database software. S(patial)RDBMS can efficiently manage very large spatial and non spatial datasets with complex structure in a multi-user and secure environment. In this documentation page, we introduce SRDBMS, we describe the database that has been set up for the JRC CbM, particularly for the Outreach project, we describe how to access, retrieve and export data stored in the DB. In the last section, we illustrate some procedures to optimize performance.

In an operational national Paying Agency (PA) CbM system, a relational database can be used not only to support signatures extraction and management data but is also a good candidate as central repository for all the information relevant for the CAP process. In such a context, the technical solution depends on the specific goals and constraints of each PA.

Learning database administration and advanced use (CbM backend) require a technical background and dedicated training, while for basic interaction (data retrieval and visualization, CbM frontend) limited expertise is needed. The scope of this documentation is to show how the database is used in the JRC CbM system and demonstrates its potentialities to support CbM.

Spatial database in a nutshell

In computer science, a database (or DB) refers to a set of data organized in such a way as to facilitate its management, use and updating, stored in a computer. The relational model is a logical model for structuring data in a database. All data are represented as relationships (tables) that are linked together. The data is manipulated with the operators of relational algebra using the SQL (Structured Query Language) language.

Spatial database are database that can manage the spatial attribute (raster or vector) of an object.

The main features of (spatial) relational databases are:

Storage capacity

Retrieval performance

Server/client structure (modular approach)

Remote access

Concurrency control

Permission policy

Data formalization

Relational environment (data modelling)

Data integrity controls

Data consistency (normalization)

Data preservation

Easy automation of processes

Integration with other data repository

Industrial standard

Cost effective

Backup/recovery

Efficient management of spatio-temporal data

Mature technology

In a nutshell, we can define relational database as a tool to securely store and preserve large volume of standardized and consistent data (including spatio-temporal data) that can be efficiently retrieved by multiple remote users with different interfaces with no data duplication.

Main database elements

The basic element of a database is called a TABLE. It is composed of columns and rows, but unlike what happens in spreadsheet, a table is declaratively created with a structure: each column has a defined DATA TYPE, and the rows (also called records) must respect this type: the system enforces this constraint, and does not allow the wrong kind of data to slip in.

Some of the frequently used data types are: integer for whole numbers, numeric for decimal numbers, text for character strings, date for dates, boolean for yes/no values, timestamp for attributes containing date and time. Each data type has specific properties and functions associated. For instance, a column declared to be of a numerical type will not accept arbitrary text strings, and the data stored in such a column can be used for mathematical computations. By contrast, a column declared to be of a character string type will accept almost any kind of data but it does not lend itself to mathematical calculations, although other operations such as string concatenation are available.

The number and order of the columns is fixed, and each column has a name. The number of rows is variable — it reflects how much data is stored at a given moment.

Each row of a table must be identified by the value of one or more columns. The same value (or set of values) cannot be repeated in two different rows. The attributes that identify a record are called PRIMARY KEY. The primary key must be explicitly defined for all the tables (although this is not strictly required to create the table, it is necessary for a correct use of the database).

Tables can be linked to one another (the jargon term for this kind of link is relation, which accounts for the R in RDBMS): you can explicitly ask that the value to put in a specific record column comes from another table by using FOREIGN KEYS. This helps reduce data replication (redundancy), and explicitly keeps track of inter-table structure in a formalized way.

A database contains one or more SCHEMAS, which in turn contain tables. Schemas also contain other kinds of objects, including data types, functions, and operators. The same object name can be used in different schemas without conflict. Schemas are analogous to directories at the operating system level, except that schemas cannot be nested. Schema are used:

to allow many users to use one database without interfering with each other

to organize database objects into logical groups to make them more manageable

third-party applications can be put into separate schemas so they do not collide with the names of other objects

It is worth mentioning another very useful feature offered by database: VIEWS. Views are queries (i.e. SQL statements, see SQL subsection) permanently stored in the database. For users (and client applications), views work like normal tables, but their data are calculated at query time and not physically stored. Changing the data in a table alters the data shown in subsequent invocations of related views. Views are useful because they can represent a subset of the data contained in a table; can join and simplify multiple tables into a single virtual table; take very little space to store, as the database contains only the definition of a view (i.e. the SQL query), not a copy of all the data it presents; and provide extra security, limiting the degree of exposure of tables to the outer world. On the other hand, a view might take some time to return its data content. For complex computations that are often used, it is more convenient to store the information in a permanent table.

The spatial bit

Until a few years ago, spatial information was managed and analysed exclusively with dedicated software (GIS) in file-based data formats (e.g. shapefiles). In spatial databases, the spatial component of an object (e.g. the boundaries of a parcel) is managed as one of its many attributes and stored in a column. From a data management point of view, spatial information is no different from a date or a quantitative measure (number). The spatial extension adds spatial data types (vectors such as points, lines, polygons, curves, in 2 or 3 dimensions, and raster) to the standard data types that store other associated (non-spatial) attributes of objects. It also introduces spatial indexes to improve performance involving these attributes.

Spatial data types can be manipulated with the SQL language through a comprehensive set of functions to analyse geographical components (e.g. calculate the area, reproject into a different system), determine spatial relationships (e.g. the intersection of two spatial objects) and modify geometries (e.g. create the line of a transect from individual plots). I The list of functions available in a spatial database is very extensive. A set of very common functions is defined by the OGC SFSQL. This essentially allows GIS functionality to be built using the capabilities of a relational database integrating spatial and non spatial data in the same environment.

A spatial database does not replace GIS software, especially for visualisation, map creation and some advanced functions, but facilitates the integration and management of spatial data with other available information. Moreover, spatial data are generally based on widely used shared standards, which makes the exchange of data between different platforms simple and straightforward and allows a seamless integration between spatial databases and GIS software, which can be used as database clients.

To visualise spatial data, like all other data in a database, a client application is needed that requests the data from the server and displays it to the user in the required format. For spatial data, the best client is QGIS. PgAdmin provides a very fast way of displaying spatial objects in a table, although it does not offer the functionality of GIS software.

PostgreSQL and PostGIS

PostgreSQL represents the state of the art as regards relational databases, and in particular spatial databases thanks to its extension PostGIS. First of all, this is open source software. This has the following advantages:

Use of standards

Interoperability with other tools

No vendor-lock policies

No limitations in its use

No costs for licenses

PostgreSQL and PostGIS have been chosen as database software for JRC CbM because they are characterized by:

Great spatial tools for data management and analysis

Geography data type, raster, topology, 3D, …

Great non-spatial tools for data management and analysis

Good documentation

Fast development

Native support by many software

Supporting, collaborative and active (large) community

Strong commercial support

Multi-platform support

Many procedural languages

Stable and secure

Mature project with long history

Used by many large companies

Another important PostgreSQL feature is the possibility to scale it beyond running on a single server, exploiting cloud based infrastructures. There are many possible approaches, for example replication, database clustering and connection pooling. Based on your specific requirements you can identify the solution that best fit them. Many companies provide commercial support for advanced PostgreSQL high performance, multi-server solutions.

As relational database are based on industrial standard, it is usually easy to move data from a specific software to another. Many other relational database software exist. PostgreSQL is the recommended choice, but if a database already exists in the organization that wants to implement a CbM system, it is probably better to keep the same platform. Other popular database with a spatial extension are for example MySQL, SQLServer and Oracle. For local, simple and single-user database SQLite with its spatial extension SpatiaLite is also a possibility (this is usually non the case for CbM).

Relational database is not the only option to store and manage data in a “modern” database. NoSQL database (as opposed to relational databases based on SQL) offers different features compared to relational database. It is more suited for contexts where the evolving data structure requires a flexible data model. Given the well structured and defined database schema of CbM, and because data integrity and consistency is better granted by SQL database, we opted for a solution based on relation database. However, given its ability to scale up easily, the NoSQL database can be considered as an alternative in specific cases.

SQL

SQL (Structured Query Language) is the universally used data definition and manipulation language in relational databases, i.e. the way for user to interact with SRDBMS. It does not require sequences of operations to be written, but to specify the logical properties of the information sought (declarative language). SQL statements are used to perform tasks such as retrieve data from a database (in this case they are commonly called “queries”) or update and create database objects. SQL is highly standardized and while each database platform has some kind of SQL dialect, it can be used with any SRDBMS software with minimal changes. While complex queries can be hard to design, SQL itself is a simple language that combines a very limited sets of commands in a way that is similar to the natural language.

You can run SQL commands, explore database objects and see tables using any client that is able to connect with your DB (i.e. most of the software that deals with data). The reference graphical interface for PostgreSQL database management and query is PgAdmin. Psql is the interactive terminal for working with Postgres (command line).

The operation of choosing the records from a table or a combination of tables is called selection: the SELECT command allows you to express clearly which columns you need, which rows, and in which order; you can also generate computed data on the fly. The basic structure of a query is the following:

SELECT

<one or more columns, or * for all columns of a table>

FROM

<one or more tables>

WHERE

<conditions to filter retrieved records on and to combine multiple tables>;

Some practical examples are provided in the section on database access. Many tutorials on SQL are available on the web. Among the others:

JRC CbM DB structure

The primary goal of the JRC CbM database is to store and make available the time series of Sentinel bands signature for each parcel. This is calculated by a Python module by rasterizing parcel polygons stored in the database and intersecting them with satellite images stored in the DIAS Object Storage. Images are selected and retrieved based on the metadata provided by the DIAS and stored in the database (particularly the area covered and the acquisition date and time). Basic statistics are calculated from the set of pixels belonging to the each parcel, which are then stored back in the database. The number of pixels with cloudy flags for Sentinel 2 is extracted for each parcel in order to identify images affected by atmospheric disturbance (and thus with not reliable statistics). This information is also stored back in the database. To sum up, the main database objects (tables) are:

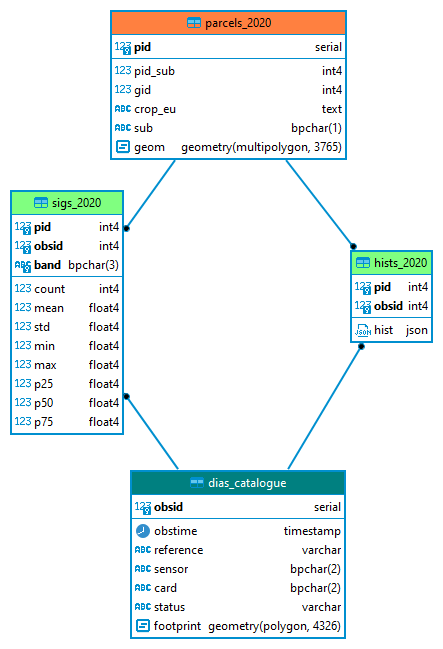

Parcels (e.g. parcels_2020)

Images metadata (dias_catalogue)

Signatures (e.g. sigs_2020)

Cloud flags histogram (hists_2020)

Parcels, signatures and histograms are year-specific because parcels change every year. The year used as suffix in the names is thus related to the year of the parcel definition, not to the year of the Sentinel data. For example, for the parcels declared in 2020 it is possible to extract the complete set of Sentinel 2 from 2016). The dias_catalogue table is not “timestamped” because it contains information about satellite images for all years. The evolution of parcels can be potentially tracked with a object-oriented versioned approach where only changing elements are recorded keeping track of all the history giving the possibility of queering both current and history record. This solution has not been implemented in the JRC CbM system.

In the Outreach database, a single dias_catalogue table for all countries is generated and another table (aois) stores the information about pilot areas, particularly their extension.

In the JRC CbM database, tables are organized in schemas. The general tables (e.g. dia_catalogue) are stored in the public schema. The other working tables are stored in a specific schema. In the case of the Outreach project, the country specific tables (parcels, signatures, histograms) are stored in a dedicated schema identified by the 2-letters ISO code of each country (for example hr in case of Hungary). When multiple Paying Agencies of the same Member State are joining, two additional letters are used to identify the PA. In this way it is easy to move data if the database has to be transferred to another local or cloud-based system.

The corresponding database data model (i.e. the conceptual representation of the real world in the structures of a relational database: tables and their relationships) in form of a simplified Entity-Relationship (ER) diagram is illustrated in Figure 1. It describes what types of data are stored and how they are organised. Columns used as primary key are indicated in bold and external keys are represented as connecting lines between tables. For each column is also indicated the data type.

Figure 1. Outreach database data model (core tables)

In some cases, the parcels table can be split in multiple tables according to the specific declaration (e.g. grazing: parcels_2020_g, mowing: parcels_2020_m, bare soil: parcels_2020_b) or to the pilot area (e.g. south: parcels_2020_s, north: parcels_2020_n). These differences can also be accommodated using specific columns in the parcels table (e.g. the sub column in the ER diagram) or using partitioned tables (see section on optimization of performances). If the parcels tables is divided in physical tables, an approach must be used to generate unique ID in the different tables that are referred to in the signatures and histograms or specific hist and sigs tables must be created (e.g. sigs_2020_g, hist_2020_g for grazing).

What is reported in the diagram and used in this demonstrative JRC CbM database is only part of the information involved in the CbM processing. The database can be easily extended to include for example additional data like present and past declarations, parcel classification according to traffic light classes and based on markers, other relevant environmental layers.

The information from different tables can be combined in a SQL statement to generate the complex information required for a specific task in form of a single table as a view. Practical examples are provided in the next section.

Database access

Server/client structure

The database architecture is based on a client-server structure. It is divided into two distinct components: a “server” program that provides a service and a “client” program that accesses the service.

The database server (PostgreSQL) is the back-end system of the database application and provides database functionality to client applications.

The client is usually an interface through which a user makes a request to the server through SQL commands. The client then converts the server’s response into the form requested by the user. Some examples of possible clients are PgAdmin, QGIS, Python through Psycopg2, R, Calc, Excel, PhP web interfaces, MS Access, ArcGIS.

In this architecture the data management and storage layer is physically separated from data use. Data are displayed by the clients but are stored in the DB and not duplicated.

A database server is installed on a physical computer (server), or in services in the cloud. Several database servers can be installed on the same machine. Several databases can be created within the same database server.

In the JRC CbM, the database is installed on the DIAS (although installation on a local machine is also an option). For more information on installation and initialization (e.g. creation of the image database table and enabling of the PostGIS spatial extension) of the database check the specific documentation.

Access parameters

In order to remotely access a database from any client, five parameters are usually necessary:

Server IP address

Port

(Database name)

User name

User password

In the Outreach project, if requested, these information are provided directly to users limiting access to the specific MS data sets, but in general DB access to the Outreach database is granted through an intermediate layer. In an internal MS/PA CbM system, we recommend to limit direct DB access to specific cases (i.e. database administrators, users with advanced skills in SQL and good knowledge of the database structure). In all the other cases an intermediate layer can be used, as in the case of the Outreach DB. This ensures performance and security by preventing poorly designed resource-intensive queries and facilitates access to basic users with no knowledge of SQL who can be guided by predefined queries offered as a simple graphical interface where only defined parameters need to be defined. This can be implemented using a RestFUL API, as in the case of the Outreach database.

User access policy

One of the most important functions of a database is the possibility of restricting access and possible operations (e.g. read, edit, insert, delete) on the data according to the different types of users, and in a differentiated manner for the different tables/rows of the database.

PostgreSQL manages access permissions to the database through ROLES. A role is an entity that can own objects and have privileges on the database. It can be an individual user or a group of users. Users can be grouped to facilitate privilege management: privileges can be granted to, or revoked from, a group as a whole simplifying a lot the management of the permission policy. This is done by creating a role that represents the group (for example administrators that can create/delete objects in the database, editors that can add, delete and update data in existing tables, and viewer that can only view all or part of the data but not change it), and then granting membership of the group role to individual user roles (administrators, analysts, final users). Database roles apply to all databases in a cluster, but permissions are then associated with the individual objects in each database. Each user is assigned a password together with the role to ensure data security. Access to the server itself can be restricted to certain IP addresses to further reinforce security. In PostgreSQL, permissions can go down to the single record level.

In the Outreach project, access is managed by the intermediate layer connected to a read-only role defined in the database (api_bot).

Data retrieval

To interact with a PostgreSQL database, it is not necessary to install PostgreSQL, but it is sufficient to install a client that connects to the database (server). The database with the data is instead physically installed on the server (e.g. in the DIAS). In this Section we show examples of connection of typical clients for spatial and non spatial data.

Only a limited set of information is provided here. Practical demonstrations are done during the MS trainings on JRC CbM. In addition, many tutorials are available on the Internet for further study.

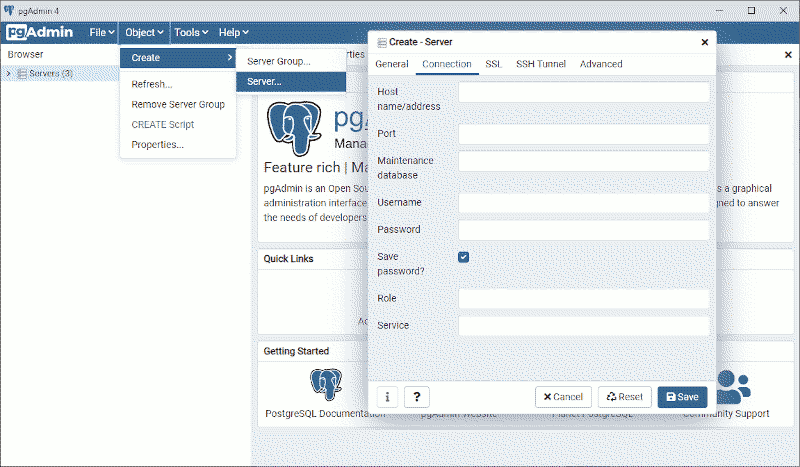

PgAdmin

PgAdmin is the most common graphical interface for querying data and managing PostgreSQL. You can view tables, query and download data, create new tables and views, edit and insert data, manage users, make backups. PgAdmin is an open source software and has very frequent updates. If you install PostgreSQL, PgAdmin is installed automatically.

PgAdmin is not the only client that you can use to manipulate data and objects in the database. An alternative to PgAdmin for database management is for example dBeaver (Community version), which is also very useful for generating ER diagrams.

Note that when you open PgAdmin for the first time, it asks you to create a password. This is not the password for accessing the databases, but only the password for accessing PgAdmin (since PgAdmin stores all database access passwords internally).

The PgAdmin interface is organised into 5 main sections (see Figure 2). The display of the elements can be optimised through the customisation options.

Figure 2. PgAdmin interface (numbers correspond to the description given below)

The 5 sections (or panels) are:

The menu bar

The toolbar

The tab bar

The tree menu with the database objects

Contents of the selected tab (object properties, tables, SQL editor)

Each part is used to perform different types of management tasks. The most common example of an operation is selecting a database object in the tree menu of panel 4 (e.g. a table) and displaying it in panel 5 (tab contents). The Help buttons in the bottom left-hand corner of each dialogue box open the online help for that box. Additional information can be accessed by navigating through the Help menu.

To create a connection to a database, select Object/Create/Server from the menu (or right-click on the Server icon in the menu and select Create/Server). A window opens in which the connection parameters must be entered. On the General tab you only need to enter a name (any name) for the connection. In the tab Connection you have to enter the IP address of the server, the port, the user and the password (see Figure 3).

Figure 3. Creating a connection to a database server

Once saved, the connection will appear in the menu tree by expanding the Server icon.

phpPgAdmin

There is an alternative to PgAdmin that does not require the installation of any local client because it uses a tool installed on the server: PhpPgAdmin. The interface is similar to PgAdmin, but it has less functionalities and can have problems with unstable connections, and it has limited development support.

Psql

Psql is the interactive terminal for working with PostgreSQL. It enables to type in queries interactively, issue them to PostgreSQL, and see the query results. Alternatively, input can be from a file or from command line arguments. In addition, psql provides a number of meta-commands and various shell-like features to facilitate writing scripts and automating a wide variety of tasks. For example \d list tables in the database and \d public.dias_catalogue list all the columns of the specified table.

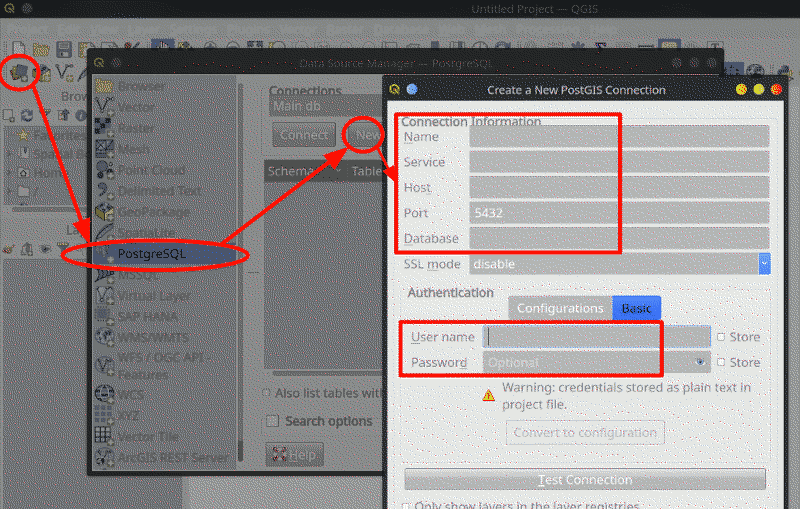

QGIS

PostgreSQL/PostGIS itself offers no tool for spatial data visualization, but this can be done by a number of client applications, in particular GIS desktop software like QGIS (a powerful and complete open source software). It offers all the functions needed to deal with spatial data and offers many tools specifically for managing and visualizing PostGIS data. Connecting to the database is pretty simple and the process is well documented. Data can be accessed in three steps: 1) create a connection to the db (the first time that you connect to the db, see Figure 4) using the database access parameters, 2) open the connection, 3) get the data.

Once the connection is created, you can use the dedicated DB Manager interface to explore, preview, visualize in the main canvas and also export spatial data (both vector and raster).

Figure 4. Connecting with the database from QGIS

Jupiter Notebook

Python is the suggested tool to access the JRC CbM and the examples provided in the system documentation are always based on it. Here a simple example of connection with the database using the Python psycopg2 is reported:

import psycopg2

import pandas as pd

from datetime import datetime

from ipywidgets import widgets

DB_HOST = 'IP_ADDRESS'

DB_NAME = 'DB_NAME'

DB_USER = 'YOUR_USER'

DB_PORT = 'PORT'

DB_PASS = 'YOUR_PASSWORK'

conn_str = f"host={DB_HOST} dbname={DB_NAME} user={DB_USER} port={DB_PORT} password={DB_PASS}"

conn = psycopg2.connect(conn_str)

getSampleList = f"""

SELECT * FROM dias_catalogue LIMIT 2;

"""

cur = conn.cursor()

cur.execute(getSampleList)

for rows in cur:

print(rows)

RESTful API

Several examples of interaction with the database using a RESTful api are provided in the dedicated JRC CbM documentation.

R

To import data from the database into R, simply use the code below (via the RPostgreSQL library):

library(RPostgreSQL)

drv <- dbDriver("PostgreSQL")

con <- dbConnect(drv, dbname="YOURDB",

host="IP_ADDRESS",

port="PORT",

user="YOUR_USER",

password="YOUR_PASSWORD")

rs <- dbSendQuery(con, "SELECT * FROM public.dias_catalogue;")

df <- fetch(rs,-1)

df[1:4,]

str(df)

dbClearResult(rs)

In the dbSendQuery command, you can insert any SQL code that will be executed by the database and then inserted into a dataframe as specified by the user (in the code above, df). In the example, the Sentinel metadata is loaded into the df dataframe.

It is also possible to import data into the database from R. You can find all the documentation and a list of the most interesting packages for working with a PostgreSQL database from R on the Internet.

Export and import data

There are different ways to export a table or the results of a query to an external file. The easiest one is to use the pgAdmin interface: in the SQL console select Query/Execute to file, the results will be saved to a local file instead of being visualized. Other database interfaces have similar tools. This can be applied to any query. For small tables, you can select the data visualized and do copy/past. For spatial data, the easiest option is to load the data in QGIS and then save as shapefile (or any other format) on your computer. Shapefiles can be imported into the database with a simple drag and drop operation in the QGIS interface. You can also import/export spatial data using ogr2ogr command from the GDAL library.

Form command line, you can use COPY (TO). COPY TO (similarly to what happens with the command COPY FROM used to import data) with a file name directly write the content of a table or the result of a query to a file, for example in .csv format. The file must be accessible by the PostgreSQL user (i.e. you have to check the permission on target folder by the user ID the PostgreSQL server runs as) and the name (path) must be specified from the viewpoint of the server. This means that files can be read or write only in folders ‘visible’ to the database servers. If you want to remotely connect to the database and save data into your local machine, you should use the command COPY instead. It performs a frontend (client) copy. \COPY is not an SQL command and must be run from a PostgreSQL interactive terminal PSQL. This is an operation that runs an SQL COPY command, but instead of the server reading or writing the specified file, PSQL reads or writes the file and routes the data between the server and the local file system. This means that file accessibility and privileges are those of the local user, not the server, and no SQL superuser privileges are required.

If you want to export a very large table or an entire database, you can use the pg_dump and pg_restore commands. These commands are used to create backup of part of all database content. The backup and restore can also be run using PgAdmin GUI. From pgAdmin, the operation of making a database dump is extremely simple: right click the database and choose Backup. There are a few output formats, apart from the default Custom one. With Plain the file will be plain (readable) SQL commands that can be opened (and edit, if needed) with a text editor. Tar will generate a compressed file that is convenient if you have frequent backups and you want to maintain an archive.

More information about the backup of the JRC CbM database is available in the db backup documentation.

Performance optimization

Basic optimization

Given the size of the tables generated by CbM, the time required to upload and retrieve the data can be long. The table that is typically most demanding in this respect and most used for analysis is the one with the signature (image statistics) per parcel and Sentinel scene/band. The number of records in this table is:

number of parcels * number of images * number of bands

To give an order of magnitude, if the parcels are 500,000, the Sentinel 2 images are 73 (an image every 5 days in a single year) and the bands are 7, the number of signatures records generated is about 260 millions. This corresponds to a size (without indexes and primary keys) of about 19 GB. As mentioned in the introduction to relational database, primary key is a compulsory element in a relational table. Technically, it is possible to create a table without a primary key, but this is a bad practice. Primary keys, where these involves columns commonly used to retrieve data, is the first optimization of time of data extraction (an index is created together with the primary key, and in case of multiple columns primary key the order of the columns matters). In the case of the sigs_YYYY tables, the primary key is based on the id of the parcel (pid), the id of the image (obsid) and the id of the band (band), that are good candidate as criteria to extract data. The combination of these three elements is unique for each row. In the case of our example, the primary key adds about 10 GB to the table size, for a total of about 30 GB.

Indexes are a common way to enhance database performance. An index allows the database server to find and retrieve specific rows much faster than it could do without an index, similar to what happens with a book summary. Indexes also add overhead to the database system as a whole, so they should be used sensibly. If a specific index is added for each of the three columns of the primary that are likely to be the most queries, the size increase of about 1.5 GB per index, for a total size of about 35 GB. Additional index can be added if other fields are used often in queries (a.g. a mean value used to detect markers). Indexes slow down upload of new data into the table, so in case of bulk insert this can increase the loading time. If this an issue, you can consider to drop the indexes and recreate them once the upload is finished).

To give an idea, for standard queries with/without keys and indexes (retrieve all the signatures for a parcel and a specific band) like:

SELECT pid, obsid, band, count, mean, std, min, max, p25, p50, p75

FROM sigs_2020

where pid = "mypid" and band = "myband";

the time needed to get the data is:

Table with primary key and indexes: 0.2 second

Table with primary key but no indexes: 2 second

Table with no primary key and no indexes: 2 minutes

while for a query based on the id of the image (all parcels for a specific image/band) performances are:

Table with primary key and indexes: 2 second

Table with primary key but no indexes: 2 minutes

Table no primary key and no indexes: 30 minutes

These numbers depend on many factors on top of table size, but they give an idea of the importance of basic optimization.

Advanced optimization

If the performance achieved with indexes are not satisfactory, other actions are possible. This can be the case when many more parcels and images are managed. In this case, additional actions that can be taken. Among the others:

Tune configuration parameters: PostgreSQL default configuration is tuned for wide compatibility rather than performance. Parameters can be changed in the postgresql.conf file to optimize the configuration for each specific case. This file is located in the data subfolder inside the folder where you installed PostgreSQL. Many tools exist to help this operation (for example: PGtunes and PGconfig.

Partitioned tables: splitting what is logically one large table into smaller physical pieces can improve query performance dramatically in certain situations, particularly when most of the heavily accessed rows of the table are in a single partition or a small number of partitions. Partitioning effectively substitutes for the upper tree levels of indexes, making it more likely that the heavily-used parts of the indexes fit in memory. These benefits will normally be worthwhile only when a table would otherwise be very large. The exact point at which a table will benefit from partitioning depends on the application, although a rule of thumb is that the size of the table should exceed the physical memory of the database server.

Table Clustering: when a table is clustered, it is physically reordered based on the index information. This speeds up queries that are based on the criteria used for clustering. Clustering is a one-time operation: when the table is subsequently updated, the changes are not clustered. That is, no attempt is made to store new or updated rows according to their index order and tables must be periodically reclustered.

Increase hardware resources: important improvements can be achieved if more hardware resources are available (e.g. CPU, RAM). If a database is very big in size, pure optimization might not be enough if the hardware is weak. In this case adequate resources are usually needed.

Multiple-Server Parallel Query Execution: even if this features in not available natively in PostgreSQL, some companies provide commercial support for this kind of optimization where a query is execute in parallel on multiple server.